Tcpreplay was designed to replay traffic previously captured in the pcap format back onto the wire for testing NIDS and other passive devices. Over time, it was enhanced to be able to test in-line network devices. However, a recurring feature request for tcpreplay is the ability to connect to a server in order to test applications and host TCP/IP stacks. It was determined early on that adding this feature to tcpreplay was far too complex, so I decided to create a new tool specifically designed for this.

Flowreplay is designed to replay traffic at Layer 4 or 7, depending on the protocol, rather than at Layer 2 like tcpreplay does. This allows flowreplay to connect to one or more servers using a pcap savefile as the basis of the connections. Hence, flowreplay allows the testing of applications running on real servers rather than passive devices.

Flowreplay must be able to process multiple connections to one or more devices. There are two options: use sockets to send and receive data, or use libpcap/libnet to work with raw packets.

Although using libpcap/libnet would allow more simultaneous connections and greater flexibility, there would be a very high complexity cost associated with it. With that in mind, I've decided to use sockets to send and receive data.

Because a pcap file can contain multiple simultaneous flows, we need to be able to support that too. The biggest problem with this is reading packet data in a different order than it is stored in the pcap file.

Reading and writing to multiple sockets is easy with select() or poll(). However, a pcap file has its data stored serially, and we need to access it randomly. There are a number of possible solutions, such as caching packets in RAM where they can be accessed randomly, creating an index of the packets in the pcap file, or converting the pcap file to another format altogether. Alternatively, I've started looking at libpcapnav as another means to navigate a pcap file and process packets out of order.
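As an illustration of the "index of the packets" option, here is a minimal sketch that scans a classic pcap savefile once and records the file offset of every packet record, so that a packet can later be reached with a single fseek(). It parses the file layout directly (a 24-byte file header followed by 16-byte record headers) rather than going through libpcap. The names `pkt_index` and `build_index` are hypothetical, and the sketch assumes the file was written in the host's byte order; it is not how flowreplay itself is implemented.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

#define PCAP_FILE_HDR_LEN 24  /* classic pcap file header */
#define PCAP_MAGIC 0xa1b2c3d4u

/* Hypothetical index: file offset of each packet record header. */
struct pkt_index {
    long   *offsets;
    size_t  count;
};

/*
 * Scan the savefile once and remember where every record starts.
 * Assumption: the file is in host byte order (magic reads as 0xa1b2c3d4).
 * A truncated final packet is still indexed; callers must check lengths.
 * Returns 0 on success, -1 on error.
 */
int build_index(FILE *fp, struct pkt_index *idx)
{
    uint32_t magic;
    uint32_t rec[4];            /* ts_sec, ts_usec, incl_len, orig_len */
    size_t cap = 0;

    idx->offsets = NULL;
    idx->count = 0;

    if (fseek(fp, 0L, SEEK_SET) != 0 ||
        fread(&magic, sizeof(magic), 1, fp) != 1 ||
        magic != PCAP_MAGIC ||
        fseek(fp, PCAP_FILE_HDR_LEN, SEEK_SET) != 0)
        return -1;

    for (;;) {
        long off = ftell(fp);

        if (fread(rec, sizeof(rec), 1, fp) != 1)
            break;              /* end of file (or truncated header) */

        if (idx->count == cap) {
            size_t ncap = cap ? cap * 2 : 64;
            long *tmp = realloc(idx->offsets, ncap * sizeof(*tmp));
            if (tmp == NULL) {
                free(idx->offsets);
                idx->offsets = NULL;
                idx->count = 0;
                return -1;
            }
            idx->offsets = tmp;
            cap = ncap;
        }
        idx->offsets[idx->count++] = off;

        /* rec[2] is incl_len: the number of captured bytes that follow */
        if (fseek(fp, (long)rec[2], SEEK_CUR) != 0)
            break;
    }
    return 0;
}
```

With such an index in memory, reading packet i out of order is an fseek() to `offsets[i]` followed by two reads. The cost is one `long` per packet, which is much cheaper than caching the packets themselves in RAM.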

Knowing when to start sending client traffic in response to the server will be "tricky". Without understanding the actual protocol involved, probably the best general solution is waiting for a given period of time after no more data from the server has been received. Not sure what to do if the client traffic doesn't elicit a response from the server (implement some kind of timeout?). This will be the basis for the default plug-in.

Dealing with IP fragmentation and TCP stream reassembly will be another really complex problem. We're basically talking about implementing a significant portion of a TCP/IP stack. One thought is to use libnids, which basically implements a Linux 2.0.37 TCP/IP stack in user space. Other solutions include porting a TCP/IP stack from Open/Net/FreeBSD or writing our own custom stack from scratch.

The biggest asynchronous problem, that pcap files are serial, has to be solved in a scalable manner. Not much can be assumed about the network traffic contained in a pcap savefile other than that Murphy's Law will be in effect. This means we'll have to deal with:

There are five major complications with flowreplay:

Missing packets in the pcap file will probably make that flow unplayable. There are probably certain situations where we can make an educated guess, but this is far too complex to worry about for the first stable release.

That still leaves creating a basic TCP/IP stack in user space. The good news is that there is already a library which does this, called libnids. As of version 1.17, libnids can process packets from a pcap savefile (it's not documented in the man page, but the code is there).

A potential problem with libnids, though, is that it has to maintain its own state/cache system. This not only means additional overhead, but jumping around in the pcap file, as I'm planning on doing to handle multiple simultaneous flows, is likely to really confuse libnids' state engine. Also, libnids is licensed under the GPL, but I want flowreplay released under a BSD-like license; I need to research whether the two are compatible in this way.

Possible solutions:

As stated earlier, one of the main goals of this project is to keep things single threaded to make coding plug-ins easier. One caveat is that any function which blocks will cause serious problems.

There are three major cases where blocking is likely to occur:

It is possible to do non-blocking IO on sockets by setting the O_NONBLOCK flag on a socket file descriptor using fcntl(2). Then all operations that would block will (usually) return with EAGAIN (operation should be retried later); connect(2) will return an EINPROGRESS error. The user can then wait for various events via poll(2) or select(2). If connect() returns EINPROGRESS, then we'll just have to do something like this:

    if (getsockopt(conn->s, SOL_SOCKET, SO_ERROR, &e, &len) < 0) {
        /* not yet */
        if (errno != EINPROGRESS) { /* yuck. kill it. */
            log_fn(LOG_DEBUG, "in-progress connect failed. Removing.");
            return -1;
        } else {
            return 0; /* no change, see if next time is better */
        }
    }
    /* the connect has finished. */

Note: It may not be totally right, but it works OK. That chunk of code gets called after poll() returns the socket as writable. If poll() returns it as readable, then it's probably because of EOF, meaning the connect failed. You must poll for both.
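For comparison, here is a minimal sketch of the same idea written against the documented behaviour of SO_ERROR, where getsockopt() itself succeeds and the pending connect error is returned in the option value. This is a variation on the snippet above, not a replacement for it, and the helper names `set_nonblocking` and `connect_status` are hypothetical.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Put a descriptor into non-blocking mode. Returns 0 on success, -1 on error. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);

    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

/*
 * Call this only after poll() has reported the socket as writable
 * (or readable, or in error) following a connect() that returned
 * EINPROGRESS.  Returns 0 if the connection is established, -1 if the
 * connect failed; in that case errno holds the reason.
 */
int connect_status(int fd)
{
    int err = 0;
    socklen_t len = sizeof(err);

    if (getsockopt(fd, SOL_SOCKET, SO_ERROR, &err, &len) < 0)
        return -1;              /* getsockopt itself failed */
    if (err != 0) {
        errno = err;            /* pending error from the connect */
        return -1;
    }
    return 0;
}
```

A caller would typically run set_nonblocking() on the new socket, call connect(), and if that fails with EINPROGRESS, poll the descriptor for POLLIN and POLLOUT before asking connect_status() for the outcome.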

As stated before, the pcap file format really isn't well suited for flowreplay because it uses the raw packet as a container for data. Flowreplay, however, isn't interested in packets; it's interested in data streams, which may span one or more TCP/UDP segments, each carried in an IP datagram which may itself be split into multiple IP fragments. Handling all this additional complexity requires a full TCP/IP stack in user space, which would have additional feature requirements specific to flowreplay.

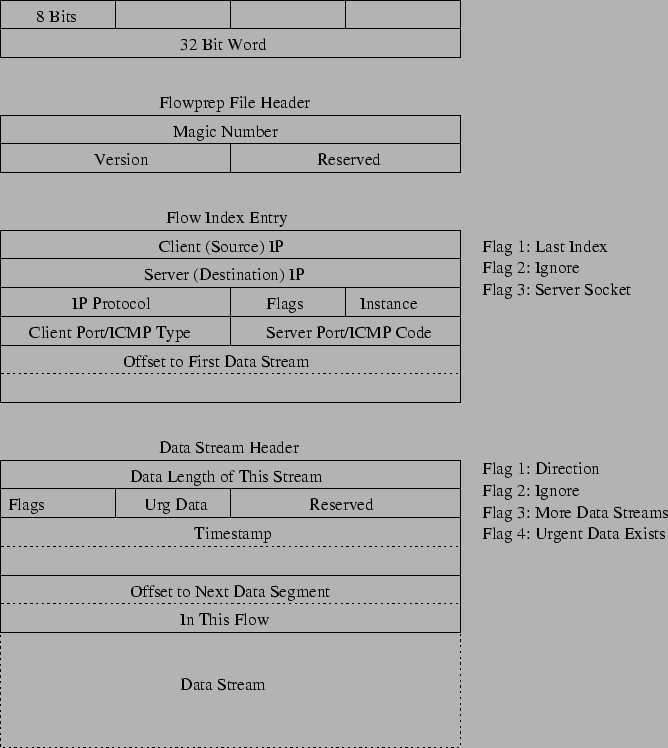

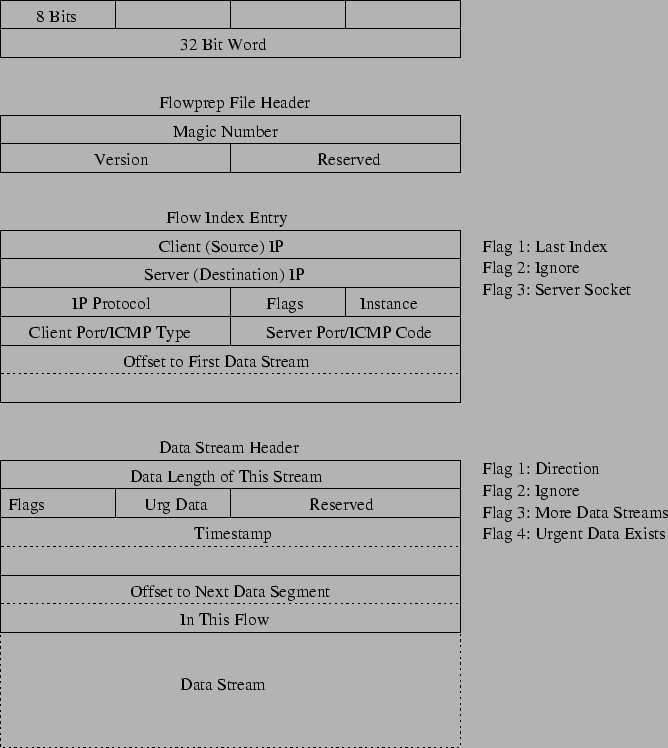

Rather than trying to do that, I've decided to create a pcap preprocessor for flowreplay called flowprep. Flowprep will handle all the TCP/IP defragmentation/reassembly and write out a file containing the data streams for each flow.

A flow file will contain three sections:

At startup, the file header is validated and the data stream indexes are loaded into memory. Then the first data stream header from each flow is read. Then each flow and subsequent data stream is processed based upon the timestamps and plug-ins.

Plug-ins will provide the "intelligence" in flowreplay. Flowreplay is designed to be a mere framework for connecting captured flows in a flow file with socket file handles. How data is processed and what should be done with it will be done via plug-ins.

Plug-ins will allow proper handling of a variety of protocols while hopefully keeping things simple. Another part of the consideration will be making it easy for others to contribute to flowreplay. I don't want to have to write all the protocol logic myself.

Each plug-in provides the logic for handling one or more services. The main purpose of a plug-in is to decide when flowreplay should send data via one or more sockets. The plug-in can use any non-blocking method of determining if it is appropriate to send data or wait for data to be received. If necessary, a plug-in can also modify the data sent.

Each time poll() returns, flowreplay calls the plug-ins for the flows which either have data waiting or, in the case of a timeout, those flows which timed out. Afterwards, all the flows are processed and poll() is called on those flows which have their state set to POLL. The process repeats until there are no more nodes in the tree.
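One iteration of that loop could look roughly like the following sketch. Everything named here (`struct flow`, `FLOW_POLL`, `flows_poll_once`, `drain_plugin`) is hypothetical. To stay short, the sketch keeps the flows in a plain array rather than a tree, and treats a poll() timeout as a timeout for every polled flow instead of tracking a timer per flow.

```c
#include <poll.h>
#include <stddef.h>
#include <unistd.h>

#define MAX_FLOWS 64                        /* arbitrary limit for the sketch */

enum flow_state { FLOW_POLL, FLOW_DONE };   /* hypothetical states */
enum flow_event { EV_DATA, EV_TIMEOUT };

struct flow;
typedef void (*plugin_fn)(struct flow *f, enum flow_event ev);

struct flow {
    int             fd;
    enum flow_state state;
    plugin_fn       plugin;                 /* decides what happens next */
};

/* Example plug-in: discard incoming data; finish on EOF, error or timeout. */
void drain_plugin(struct flow *f, enum flow_event ev)
{
    char buf[512];

    if (ev == EV_TIMEOUT || read(f->fd, buf, sizeof(buf)) <= 0)
        f->state = FLOW_DONE;
}

/*
 * Poll every flow in the POLL state (at most MAX_FLOWS of them), then
 * call the plug-in of each flow that has data waiting, or of every
 * polled flow if poll() timed out.  Returns the number of flows still
 * in the POLL state afterwards, or -1 on error.
 */
int flows_poll_once(struct flow *flows, size_t n, int timeout_ms)
{
    struct pollfd pfds[MAX_FLOWS];
    struct flow  *polled[MAX_FLOWS];
    size_t i, np = 0;
    int ready, remaining = 0;

    for (i = 0; i < n && np < MAX_FLOWS; i++) {
        if (flows[i].state != FLOW_POLL)
            continue;
        pfds[np].fd = flows[i].fd;
        pfds[np].events = POLLIN;
        pfds[np].revents = 0;
        polled[np++] = &flows[i];
    }
    if (np == 0)
        return 0;

    ready = poll(pfds, (nfds_t)np, timeout_ms);
    if (ready < 0)
        return -1;

    for (i = 0; i < np; i++) {
        if (ready == 0)
            polled[i]->plugin(polled[i], EV_TIMEOUT);
        else if (pfds[i].revents & (POLLIN | POLLHUP | POLLERR))
            polled[i]->plugin(polled[i], EV_DATA);
    }

    for (i = 0; i < n; i++)
        if (flows[i].state == FLOW_POLL)
            remaining++;
    return remaining;
}
```

The caller would keep invoking flows_poll_once() until it returns 0, which corresponds to there being no flows left to process.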

Initially, flowreplay will ship with one basic plug-in called "default". Any flow which doesn't have a specific plug-in defined will use default. The goal of the default plug-in is to work "good enough" for a majority of single-flow protocols such as SMTP, HTTP, and Telnet. Protocols which use encryption (SSL, SSH, etc.) or multiple flows (FTP, RPC, etc.) will never work with the default plug-in. Furthermore, the default plug-in will only support connections to a server; it will not support accepting connections from clients.

The default plug-in will provide no data level manipulation and only a simple method for detecting when it is time to send data to the server. Detecting when to send data will be done by a "no more data" timeout value. Basically, by using the pcap file as a means to determine the order of the exchange, any time it is the server's turn to send data, flowreplay will wait for the first byte of data and then start the "no more data" timer. Every time more data is received, the timer is reset. If the timer reaches zero, then flowreplay sends the next portion of the client side of the connection. This is repeated until the flow has been completely replayed or a "server hung" timeout is reached. The server hung timeout is used to detect a server which crashed and never starts sending any data which would start the "no more data" timer.

Both the "no more data" and "server hung" timers will be user defined values and global to all flows using the default plug-in.
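The two timers can be sketched with poll(), as below. The function name `wait_for_server` and its parameters are hypothetical. For simplicity the sketch blocks inside a single call, whereas the real plug-in would have to spread the same logic across iterations of the main poll() loop. Received data is discarded, since the default plug-in does no data manipulation.

```c
#include <poll.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Wait until the server's turn is over.
 *   hung_ms:  how long to wait for the first byte ("server hung" timeout)
 *   quiet_ms: how long the server must stay silent ("no more data" timeout)
 * Returns the number of bytes received once the server went quiet or
 * closed the connection (0 if it closed without sending anything),
 * or -1 if the server hung or an error occurred.
 */
long wait_for_server(int fd, int hung_ms, int quiet_ms)
{
    struct pollfd pfd;
    char buf[4096];
    long total = 0;
    int timeout = hung_ms;          /* until the first byte arrives */

    pfd.fd = fd;
    pfd.events = POLLIN;

    for (;;) {
        int ready;
        ssize_t n;

        pfd.revents = 0;
        ready = poll(&pfd, 1, timeout);
        if (ready < 0)
            return -1;
        if (ready == 0)                     /* a timer expired */
            return total > 0 ? total : -1;  /* server quiet, or hung */

        n = read(fd, buf, sizeof(buf));
        if (n < 0)
            return -1;
        if (n == 0)                         /* server closed the connection */
            return total;

        total += n;
        timeout = quiet_ms;  /* data seen: each new poll() restarts the timer */
    }
}
```

When the function returns a positive count, it is the client's turn, and the next portion of the client side of the flow can be written to the socket.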

Each plug-in will consist of the following:

The translation was initiated by Aaron Turner on 2005-02-10